Three things you need to know about data cleanliness

The recent 50th anniversary of putting a man on the moon revived the famous quote: “We choose to go to the Moon …, not because they are easy, but because they are hard; because that goal will serve to organize and measure the best of our energies and skills.” I said something similar when a customer recently complained to me about data modeling and data cleansing. Yes, having clean data is hard, but it is what allows businesses to thrive and users to extend into new analytics and insights. The important point to remember is that cleaning your data takes time, money, and effort, and that cost must be balanced against the value you expect the data to drive.

What is clean data, and how do you get it?

If you have read my other blogs, you already know that clean data is somewhat relative, because data by itself has no state. Clean data is relative to its intent or purpose. For the most part, clean data is consistent with its data domain (numeric fields really contain numbers), agrees with correlated data (it keeps relational integrity), and is complete with respect to the model (not a lot of missing data columns).
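To make those three properties concrete, here is a minimal Python sketch of what such checks might look like. The record layout, the field names (`order_id`, `customer_id`, `amount`), and the function names are hypothetical and chosen only for illustration; real checks depend entirely on your own model and the purpose of the data.

```python
def is_numeric(value):
    """Domain check: does the value really parse as a number?"""
    try:
        float(value)
        return True
    except (TypeError, ValueError):
        return False


def check_orders(orders, customers,
                 required=("order_id", "customer_id", "amount")):
    """Return (order_id, problem) pairs for a batch of order records."""
    known_customers = {c["customer_id"] for c in customers}
    problems = []
    for order in orders:
        oid = order.get("order_id")
        # Completeness: every required column is present and non-empty.
        for field in required:
            if order.get(field) in (None, ""):
                problems.append((oid, f"missing {field}"))
        # Domain consistency: a populated amount must be a number.
        amount = order.get("amount")
        if amount not in (None, "") and not is_numeric(amount):
            problems.append((oid, "amount is not numeric"))
        # Relational integrity: the order must point at a known customer.
        cust = order.get("customer_id")
        if cust not in (None, "") and cust not in known_customers:
            problems.append((oid, "unknown customer_id"))
    return problems


# Made-up sample data, for illustration only.
customers = [{"customer_id": "C1"}]
orders = [
    {"order_id": 1, "customer_id": "C1", "amount": "19.99"},
    {"order_id": 2, "customer_id": "C9", "amount": "12.50"},
    {"order_id": 3, "customer_id": "C1", "amount": "twelve"},
    {"order_id": 4, "customer_id": "C1", "amount": ""},
]
for problem in check_orders(orders, customers):
    print(problem)
```

Which fields count as required, and how strict the domain check should be, are decisions for whoever owns the data and knows what it will be used for.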

Some of this can be validated at the point where data is created, although companies now get data from many external sources as well. Cleansing data is an iterative process: the first step is data completeness, and then, as the data is used, you extend out to accuracy and relationships. As data becomes more integral to your processes, it should be held to higher cleansing standards.

Clean data drives your business outcomes

Yes, it is hard to have clean data, but the benefit is tremendous. Clean data allows for actionable analytics that drive retail stocking decisions, power effective marketing programs, and enable just-in-time inventory at manufacturing plants. Every decision, made at every company, is improved when the data is better.

One example (anti-example?) of this is a company that had email addresses but found out that over 60% of them were invalid or not even properly formatted. Even worse, the company was not retaining the bounce-backs to drive correction efforts. It is nearly impossible to have an effective customer outreach strategy when you do not even know how to connect with most of your customers!
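A problem like this is cheap to measure. The sketch below is only an illustration: the pattern is a deliberately loose format check that I am assuming for the example (not a full address validator), and `invalid_rate` and its `bounced` parameter are hypothetical names. A format check can never tell you whether a mailbox actually exists, which is exactly why retained bounce-backs matter.

```python
import re

# Deliberately loose shape check: something@something.tld, no whitespace.
# It tests format only and cannot confirm that a mailbox exists.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_well_formed(address):
    """True if the value is a string that looks like an email address."""
    return (isinstance(address, str)
            and EMAIL_PATTERN.fullmatch(address.strip()) is not None)


def invalid_rate(addresses, bounced=frozenset()):
    """Share of addresses that are malformed or have a recorded bounce."""
    if not addresses:
        return 0.0
    bad = sum(1 for a in addresses
              if not is_well_formed(a) or a in bounced)
    return bad / len(addresses)


# Made-up sample data, for illustration only.
addresses = ["ann@example.com", "bob@example", "no-at-sign.com",
             "cat@example.org", ""]
bounced = {"cat@example.org"}  # addresses with a retained bounce-back
print(f"{invalid_rate(addresses, bounced):.0%} of addresses need attention")
```

Tracking that rate over time tells you whether your correction efforts are actually working.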

Without clean data you won’t get good AI

Having clean data for analytics is a requirement, but when it comes to enabling the next wave of AI and machine learning, it is beyond critical. The worst data scientist with good data will always outperform the best data scientist with bad data.

When companies run algorithms that are not intuitively understood by humans, bad or dirty data leads to outcomes that are “wrong,” and no one can understand why the algorithms went awry. At the benign end, this can mean advertising to the wrong customer, but it can become a matter of life and death in healthcare or in models for self-driving vehicles.

To learn more about how AI leverages clean data to create disruption across industries, check out this.

It’s time to clean up

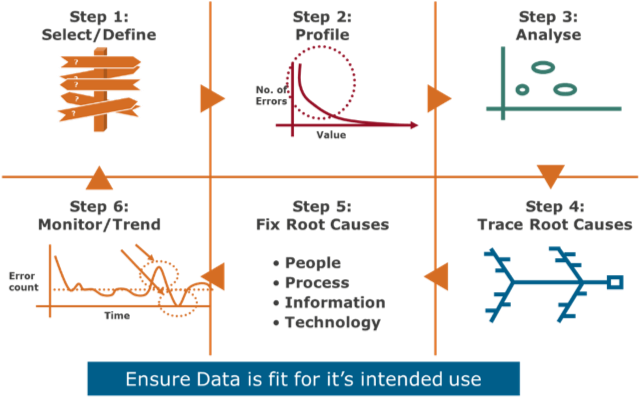

The call to action is to take a good, hard look at your data and how clean it is. Data elements should have owners who vouch for the cleanliness of the data, or at least define how to test it. With that in place, a simple closed-loop process that detects, alerts, and corrects data can be established.
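As one possible shape for that loop, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Rule` structure, the `run_quality_loop` function, the owner name, and the two-letter state code rule are hypothetical, and a real process would send alerts to the owner rather than simply printing them.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class Rule:
    """An owner-defined cleanliness test for one data element."""
    field: str
    owner: str
    is_clean: Callable[[Any], bool]
    fix: Optional[Callable[[Any], Any]] = None  # optional auto-correction


def run_quality_loop(records, rules, alert=print):
    """Detect violations, alert the owner, and correct where possible.

    Returns the number of violations left uncorrected.
    """
    unresolved = 0
    for index, record in enumerate(records):
        for rule in rules:
            value = record.get(rule.field)
            if rule.is_clean(value):  # detect
                continue
            alert(f"{rule.owner}: record {index} has bad "
                  f"{rule.field}: {value!r}")  # alert
            if rule.fix is not None:  # correct
                fixed = rule.fix(value)
                if rule.is_clean(fixed):
                    record[rule.field] = fixed
                    continue
            unresolved += 1
    return unresolved


# Hypothetical rule: states are stored as two-letter uppercase codes.
state_rule = Rule(
    field="state",
    owner="sales-ops",
    is_clean=lambda v: isinstance(v, str) and len(v) == 2 and v.isupper(),
    fix=lambda v: v.strip().upper() if isinstance(v, str) else v,
)
records = [{"state": "CA"}, {"state": " ca "}, {"state": "California"}]
remaining = run_quality_loop(records, [state_rule])
print(f"{remaining} record(s) still need manual correction")
```

The automatic fix handles only the trivial cases. Whatever it cannot repair goes back to the owner, who is the one in a position to decide what the right value is.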

When you do need to update incorrect data, make sure that you also fix the collection or transformation process so that new data comes in at a better level of quality. This matters because the data needs to be consistent throughout your data streams. Otherwise you make strategic decisions on data that never showed itself operationally, which will stall efforts to become more real-time in your analytics and hamper your responsiveness to customers.

I’ll close with another of my favorite quotes for this topic. It is from the movie “A League of Their Own,” when the coach, Jimmy Dugan, says, “It's supposed to be hard. If it wasn't hard, everyone would do it. The hard... is what makes it great.”

Starting with Teradata in 1987, Rob Armstrong has contributed in virtually every aspect of the data warehouse and analytical processing arenas. Rob’s work in the computer industry has been dedicated to data-driven business improvement and more effective business decisions and execution. Roles have encompassed the design, justification, implementation and evolution of enterprise data warehouses.

In his current role, Rob continues the Teradata tradition of integrating data and enabling end-user access for true self-driven analysis and data-driven actions. Increasingly, he incorporates the world of non-traditional “big data” into the analytical process. He also has expanded the technology environment beyond the on-premises data center to include the world of public and private clouds to create a total analytic ecosystem.

Rob earned a B.A. degree in Management Science with an emphasis in mathematics and relational theory at the University of California, San Diego. He lives and works in San Diego.
