“Fake news” and rumors have always been a prevailing theme in human history. Be it politics, religion, games, entertainment or any other industry, none has been spared false news reports or misinformation. Fake news gives rise to false hopes, misunderstandings, superstitions, riots and many other adverse impacts on human society. With the advancement of technology and the introduction of social media, the spread of fake news has reached its peak, leading to disharmony and unrest in most parts of the world.

Recently, with the outbreak of COVID-19, the world is in crisis and people are searching for ways to stay healthy. Many inaccurate reports are already circulating on different social media platforms, giving tips on how to kill the virus. Most people, without any research, consider them to be true and have started following them. This has created false hopes for health and, in extreme cases, led to sickness or loss of life.

The World Health Organization (WHO) mentions misinformation as a big concern and calls this spread of fake news an Infodemic.

The UK has recently started a joint campaign with WHO to fund a new initiative to challenge misinformation and mistruths, which are dangerous and should be stopped at the source.

Some examples of fake health news, myths and misinformation around the coronavirus can be found here and here.

These include:

- Inhaling steam kills coronavirus

- Taking hot water showers (60 degrees centigrade) cures Covid-19

- Drinking lemon water and garlic prevents coronavirus

- Drinking alcohol kills coronavirus

- Mosquito bites spread Covid-19

- Drinking bleach cures Covid-19

- Gargling saltwater prevents Covid-19

- Bathing with cow dung prevents Covid-19

Misinformation can be even more impactful when influential people spread it.

Teradata recently organized a Global Hackathon to find solutions to tackle the COVID-19 crisis, both from a medical and non-medical perspective.

There were around 70 great ideas submitted by various teams globally and 25 of those ideas were selected for the Hackathon. Our team was among the 25 selected.

Our idea was to build a tool which can detect misinformation in health news related to COVID-19.

The importance of such tools becomes clear when manual fact checking is compared with automated identification:

- Fact checkers

  - Manual, slow and involves a lot of effort

  - Not accessible to everyone

  - Usually require domain experts, such as doctors, to debunk myths

  - Fact checking is not the primary job of doctors

- Automated fake health news identification

  - Completely automated

  - Quick response

  - Ability to learn by “examples”

  - Ability to process a lot of information frequently

  - Cost effective

Our solution emphasized the following points:

- Uses techniques in Artificial Intelligence

- Uses Supervised Learning – Learning with examples

- Completely automated with no human intervention

- Provides web based easy access – no apps required

- Provides API for seamless integration into 3rd party apps and chatbots

- Can be exposed as “software as a service” to identify fake health myths

- Can save human lives

Some of the use cases of automated tools:

- Users can check the veracity of the health news using this tool

- Publishers can use this tool to validate before publishing

- Naïve users can verify information before forwarding the information

- Social media networks can use this tool to validate the accuracy of the claim in the post/tweet itself

- Third party app developers can utilize our web-based API for integration into their apps

- Health chat bots can also leverage our API for first level verification.

Now let’s discuss our approach to bring a solution to the idea. We took the below steps:

- Collected a dataset of 1,200+ fake (myth) and genuine health news items

  - Around 600 were fake and 600 were genuine

- Used an NLP pipeline to model the patterns of fake health tips and myths

- Created “Fake-o-meter” - an automated fake news classifier which tells the probability of the given news being fake; achieves an accuracy of 90%

- Used Vantage for training the models

- Created a web-based API which can be easily integrated with other apps

- Integrated with a web-based user interface in Flask for easy usage

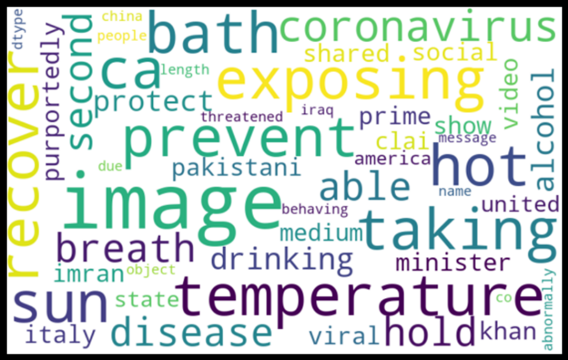

We even created word clouds for both the genuine and fake news data sets. The output looked something like this.

Please note, the word clouds are made from the 1,200+ news items that we collected. They may differ with more data.

Genuine News

Fake News


We also created word clouds from Text Analysis in Vantage Appcenter showing the positive (green) and negative (red) sentiments in the COVID-19 data.


Below are the Implementation Details:

- Collected the dataset from social media platforms and labeled each item as ‘Fake’ or ‘Genuine’ by cross-verifying it against reliable sources

- Used these “examples” to train a Machine Learning model which can predict if a new claim is Fake or Genuine based on its past observations

- The NLP workflow involves stop-word removal, punctuation removal, lemmatizing/stemming, etc.

- Used Vantage text analytic functions to produce a clean corpus and divided the dataset into Train and Validation sets

- Feature extraction techniques like TF-IDF* were used

- TextClassification functions in Vantage were used for creating a model from these features

- Applied the model to test data sets and measured the accuracy of the classification

*As per Wikipedia, TF-IDF, short for Term Frequency–Inverse Document Frequency, is a numerical statistic that is intended to reflect how important a word is to a document in a collection or corpus. It is often used as a weighting factor in searches of information retrieval, text mining, and user modeling. The TF-IDF value increases proportionally to the number of times a word appears in the document and is offset by the number of documents in the corpus that contain the word, which helps to adjust for the fact that some words appear more frequently in general.
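To make the definition concrete, here is a minimal sketch of stop-word removal followed by TF-IDF weighting in plain Python. It is not the Vantage implementation used in the hackathon: the tiny stop-word list, the function names `tokenize` and `tf_idf`, and the sample claims are all made up for illustration, and it uses one common variant of the formula (relative term frequency times the log of inverse document frequency).

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "an", "the", "is", "and", "of", "to", "in"}


def tokenize(text):
    """Lowercase the text, drop punctuation, and remove stop words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return [w for w in words if w not in STOP_WORDS]


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    tf  = count of the term in the document / number of terms in the document
    idf = log(number of documents / number of documents containing the term)
    """
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1  # guard against empty documents
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Made-up example claims, not taken from the hackathon dataset.
docs = [
    "Drinking bleach cures the virus",
    "Washing hands reduces the spread of the virus",
    "Masks reduce the spread",
]
for doc, doc_weights in zip(docs, tf_idf(docs)):
    top = sorted(doc_weights.items(), key=lambda kv: kv[1], reverse=True)[:3]
    print(doc, "->", [(term, round(weight, 3)) for term, weight in top])
```

Words that appear in every document get a weight of zero under this variant, while words that are specific to one claim (such as "bleach" here) score highest, which is why such features are useful for separating classes of text.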

Results:

Below are some of the models used, along with the accuracy score:


Vantage functions used in the model training and prediction are as follows:

- NaiveBayesTextClassifierTrainer

- NaiveBayesTextClassifierPredict

- TextClassifierTrainer

- TextClassifier

- TextClassifierEvaluator
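The Vantage functions listed above are not reproduced here. As a rough illustration of what a Naive Bayes text classifier does when it is trained and then asked for a probability, the following is a minimal sketch in plain Python. The class name `NaiveBayesText`, its methods, and the four training sentences are hypothetical and chosen only for the example; it implements multinomial Naive Bayes with add-one smoothing, which may differ from what Vantage does internally.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class NaiveBayesText:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict_proba(self, text):
        """Return {class: probability} for one piece of text."""
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for c in self.classes:
            # Start from the class prior, then add per-word log likelihoods.
            score = math.log(self.doc_counts[c] / total_docs)
            denom = sum(self.word_counts[c].values()) + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((self.word_counts[c][word] + 1) / denom)
            log_scores[c] = score
        # Turn log scores into probabilities, subtracting the max for stability.
        peak = max(log_scores.values())
        exp_scores = {c: math.exp(s - peak) for c, s in log_scores.items()}
        norm = sum(exp_scores.values())
        return {c: v / norm for c, v in exp_scores.items()}


# Made-up training examples, not taken from the hackathon dataset.
train_texts = [
    "drinking bleach cures covid",
    "garlic water prevents coronavirus",
    "wash hands often with soap",
    "keep distance and wear a mask",
]
train_labels = ["fake", "fake", "genuine", "genuine"]

model = NaiveBayesText().fit(train_texts, train_labels)
probs = model.predict_proba("bleach cures coronavirus")
print(f"Probability fake: {probs['fake']:.0%}")
```

With only four training sentences the numbers mean very little; the point is the shape of the workflow: train on labeled examples, then return a probability per class for a new claim.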


Although the model is ready for predicting the authenticity of news, it has a few limitations:

- Not a replacement for a doctor or health care professional

- Has false positives and false negatives

- Any advice should be taken after consulting health care professionals

- Limited by the quality of training data. What goes in comes out!
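To show how false positives and false negatives relate to a headline accuracy figure, here is a small sketch that counts them from a list of true and predicted labels. The helper names `confusion_counts` and `summarize` and the ten labels are invented for the example and are not results from our model.

```python
def confusion_counts(actual, predicted, positive="fake"):
    """Count true/false positives and negatives, treating `positive` as the flagged class."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive and a != positive:
            fp += 1  # genuine news wrongly flagged as fake
        elif p != positive and a == positive:
            fn += 1  # fake news that slipped through as genuine
        else:
            tn += 1
    return tp, fp, tn, fn


def summarize(actual, predicted, positive="fake"):
    """Return accuracy, precision, and recall along with the raw error counts."""
    tp, fp, tn, fn = confusion_counts(actual, predicted, positive)
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "false_positives": fp,
        "false_negatives": fn,
    }


# Made-up labels for illustration only.
actual = ["fake"] * 5 + ["genuine"] * 5
predicted = ["fake", "fake", "fake", "fake", "genuine",
             "genuine", "genuine", "genuine", "genuine", "fake"]

print(summarize(actual, predicted))
```

For a health tool, the two kinds of error are not equally costly: a false negative lets a dangerous myth pass as genuine, so recall on the fake class deserves attention alongside overall accuracy.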

There are some more topics to be covered for improving the tool. Below is some of the future work we can implement:

- Provide reference to the health knowledge base for more confidence

- Identify and crawl relevant information from the knowledge base

- Techniques for information extraction

- Automatically update the knowledge base

- Can use deep learning techniques when more data is available

As mentioned above, the FAKE-o-METER is the tool which checks the probability of your news being fake or genuine. As we have trained the model on only about 1,200 examples, not every message will get an accurate prediction.

Machine Learning algorithms are always data greedy and data hungry. So, with more data fed into it, the model will become more reliable.


Below is one example of how to submit news. You can submit multiple messages on separate lines.


The output will show the authenticity of the News in percentage. The higher the percentage, the higher the probability that the news is fake.
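The multi-line submission and percentage output described above could be handled roughly as follows. This is only a sketch under assumptions: `score_submission` is a hypothetical helper, the output field names are invented, and `placeholder_scorer` is a stand-in for the trained model rather than anything the tool actually uses.

```python
import json


def score_submission(submission, predict_fake_probability):
    """Split a multi-line submission and score each non-empty line.

    `predict_fake_probability` is any callable that maps a message to a
    probability between 0 and 1 of the message being fake.
    """
    results = []
    for line in submission.splitlines():
        message = line.strip()
        if not message:
            continue  # skip blank lines between messages
        probability = predict_fake_probability(message)
        results.append({
            "message": message,
            "fake_percent": round(probability * 100, 1),
        })
    return results


def placeholder_scorer(message):
    """Stand-in for the trained model (an assumption, for illustration only)."""
    suspicious = {"cures", "kills", "bleach"}
    hits = sum(word in suspicious for word in message.lower().split())
    return min(1.0, 0.2 + 0.3 * hits)


submission = """Drinking bleach cures Covid-19

Wash your hands regularly with soap"""

print(json.dumps(score_submission(submission, placeholder_scorer), indent=2))
```

Passing the scorer in as a parameter keeps the input handling separate from the model, so the same function could sit behind a web form or an API endpoint.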


Question: Above all these AI tools, models and algorithms, what is the most powerful tool in reducing the spread of misinformation?

The answer is: WE, the Humans.

If we stand united and stop promoting and propagating misinformation, we will be in much better shape to fight the pandemics of the future. Stop the Infodemic to help fight the Pandemic!

Dhruba Barman has been working with Teradata since 2012 in India and is part of Managed Services in Mumbai, working as a Performance DBA. His daily tasks involve performance optimization of customers' jobs and reports to add value to their data. He provides Vantage training within Managed Services and Tech Talk sessions and is actively working on the new Vantage system for his customers, providing different ways of optimizing the new IFX system using the new features of TD16.20. He is interested in learning new technologies and actively participates in Machine Learning Hackathons, both inside and outside Teradata.


(Author):

Vijayasaradhi Indurthi

Vijay is an architect in Vantage Core Product Engineering team in Hyderabad, India. His interests include Text Analytics, Natural Language Processing and Machine Learning.
