In the second installment of this blog, we introduced machine learning as a subfield of artificial intelligence (AI) concerned with methods and algorithms that allow machines to improve themselves and to learn from the past. Machine learning is often concerned with making so-called “supervised predictions,” or learning from a training set of historical data where objects or outcomes are known and labelled. Once trained, our machine or “intelligent agent” can differentiate between, say, a cat and a mat.

The currently much-hyped “deep learning” is shorthand for the application of many-layered artificial neural networks (ANNs) to machine learning. An ANN is a computational model inspired by the way the human brain works. Think of each neuron in the network as a simple calculator connected to several inputs. The neuron takes these inputs and applies a different “weight” to each input before summing them to produce an output.

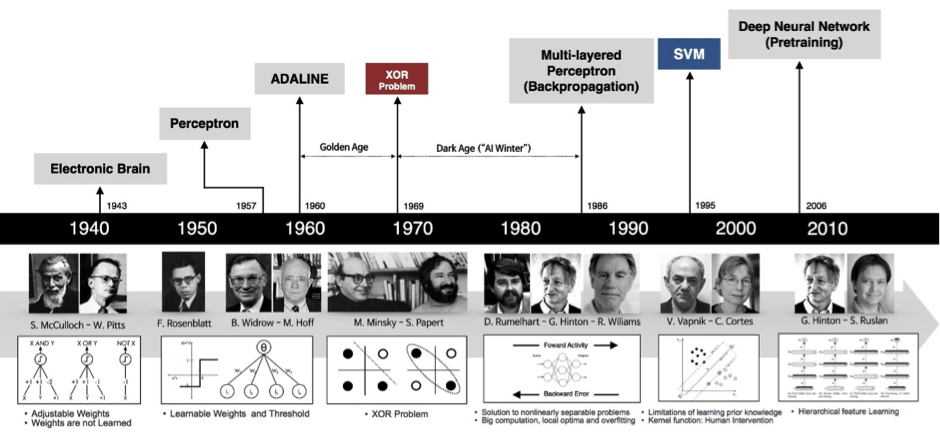
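To make the single neuron described above concrete, here is a minimal Python sketch of the weighted sum. The function name `neuron_output`, the optional `bias` term and the example numbers are our own illustrative assumptions, not part of any particular library.

```python
def neuron_output(inputs, weights, bias=0.0):
    """Multiply each input by its own weight and sum the results.

    The bias term is an assumption added for illustration; it defaults
    to 0.0 so the function reduces to a plain weighted sum.
    """
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Three inputs, three hypothetical weights.
example = neuron_output([1.0, 2.0, 3.0], [0.5, -1.0, 0.25])
print(example)  # 0.5 - 2.0 + 0.75 = -0.75
```

With no further processing of the output, this is exactly the arithmetic of a linear regression prediction.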

If you’ve followed this so far, you might be wondering what all the fuss is about. What we have just described — take a series of inputs, multiply them by a series of coefficients and then perform a summation — sounds a lot like boring, old linear regression. In fact, the perceptron algorithm — one of the very first ANNs constructed — was invented way back in 1957 at Cornell to support image processing and classification (class = “cat” or class = “mat”?). It was also much-hyped, until it was proven that perceptrons could not be trained to recognize many classes of patterns.

Research into ANNs largely stagnated until the mid-’80s, when multilayered neural networks were constructed. In a multilayered ANN, the neurons are organized in layers. The output from the neurons in each layer passes through an activation function (a fancy term for a usually nonlinear function that often squashes the output into a fixed range, such as a number between 0 and 1) before becoming an input to a neuron in the next layer, and so on, and so on. With the addition of “back propagation” (think feedback loops that pass the prediction error back through the layers to adjust the weights), these new multilayer ANNs were used as one of several approaches to supervised machine learning through the early ’90s. But they didn’t scale to larger problems, so they couldn’t break into the mainstream at that time.
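The layered structure can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a training recipe: the weights are picked by hand, not learned by back propagation, the sigmoid is just one common choice of activation function, and the XOR target is our own choice of example (a classic pattern that a single-layer perceptron cannot represent). All names are hypothetical.

```python
import math


def sigmoid(z):
    """Activation function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum, then applies the activation."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not learned) weights, assumed purely for illustration.
HIDDEN_WEIGHTS = [[20.0, 20.0], [-20.0, -20.0]]  # roughly OR and NAND
HIDDEN_BIASES = [-10.0, 30.0]
OUTPUT_WEIGHTS = [[20.0, 20.0]]                  # roughly AND of the two
OUTPUT_BIASES = [-30.0]


def forward(inputs):
    """The hidden layer's outputs become the inputs of the output layer."""
    hidden = layer_forward(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer_forward(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, round(forward([a, b])))
```

Rounded to the nearest integer, the outputs are 0, 1, 1, 0. The nonlinear activation between the layers is what lets the two layers together capture a pattern that no single weighted sum can.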

The breakthrough came in 2006, when Geoff Hinton, a University of Toronto computer science professor, and his Ph.D. student Ruslan Salakhutdinov published two papers that demonstrated how very large neural networks could be made to work much faster than before. These new ANNs featured many more layers of computation, and thus the term “deep learning” took hold. When researchers started to apply these techniques to huge data sets of speech and image data, and used powerful graphics processing units (GPUs) originally built for video gaming to run the ANN computations, these systems began beating “traditional” machine learning algorithms and could be applied to problems that other machine learning algorithms had not solved before.

Milestones in the development of neural networks (Andrew L. Beam)

But why is deep learning so powerful, especially in complex areas like speech and image recognition?

The magic of many-layered ANNs when compared with their “shallow” forebears is that they are able to learn from (relatively) raw and very abstract data, like images of handwriting. Modern ANNs can feature hundreds of layers and are able to learn the weights that should be applied to different inputs at different layers of the network, so that they are effectively able to choose for themselves the “features” that matter in the data and in the intermediate representations of that data in the “hidden” layers.

By contrast, the early ANNs were usually trained on handmade features, with feature extraction representing a separate and time-consuming part of the analysis that required significant expertise and intuition. If that sounds (a) familiar and (b) like a big deal, that’s because it is; when using “traditional” machine learning techniques, data scientists typically spend up to 80 percent of their time cleaning the data, transforming them into an appropriate representation and selecting the features that matter. Only the remaining 20 percent of their time is spent delivering the real value: building, testing and evaluating models.

So, should we all now run around and look for nails for our shiny, new supervised machine learning hammer? We think the answer is yes — but also, no.

There is no question that deep learning is the hot new kid on the machine learning block. Deep learning methods are a brilliant solution for a whole class of problems. But as we have pointed out in earlier instalments of this series, we should always start any new analytic endeavour by attempting to thoroughly understand the business problem we are trying to solve. Every analytic method and technique is associated with different strengths, weaknesses and trade-offs that render it more-or-less appropriate, depending on the use-case and the constraints.

Deep learning’s strengths are predictive accuracy and the ability to short-circuit the arduous business of data preparation and feature engineering. But, like all predictive modeling techniques, it has an Achilles’ heel of its own. Deep learning models are extremely difficult to interpret, so the prediction or classification that the model makes has to be taken on trust. Deep learning also has a tendency to “overfit” (i.e., to memorise the training data rather than produce a model that generalises well), especially where the training data set is relatively small. And whilst the term “deep learning” sounds like it refers to a single technique, in reality it refers to a family of methods, and choosing the right method and the right network topology is critical to creating a good model for a particular use-case and a particular domain.

All of these issues are the subject of active current research — and so the trade-offs associated with deep learning may change. For now, at least, there is no one modeling technique to rule them all — and the principle of Occam’s razor should always be applied to analytics and machine learning. And that is a theme that we will return to later in this series.

For more on this topic, check out this blog about the business impact of machine learning.

Martin leads Teradata’s EMEA technology pre-sales function and organisation and is jointly responsible for driving sales and consumption of Teradata solutions and services throughout Europe, the Middle East and Africa. Prior to taking up his current appointment, Martin ran Teradata’s Global Data Foundation practice and led efforts to modernise Teradata’s delivery methodology and associated tool-sets. In this position, Martin also led Teradata’s International Practices organisation and was charged with supporting the delivery of the full suite of consulting engagements delivered by Teradata Consulting – from Data Integration and Management to Data Science, via Business Intelligence, Cognitive Design and Software Development.

Martin was formerly responsible for leading Teradata’s Big Data Centre of Excellence – a team of data scientists, technologists and architecture consultants charged with supporting Field teams in enabling Teradata customers to realise value from their Analytic data assets. In this role Martin was also responsible for articulating to prospective customers, analysts and media organisations outside of the Americas Teradata’s Big Data strategy. During his tenure in this position, Martin was listed in dataIQ’s “Big Data 100” as one of the most influential people in UK data-driven business in 2016. His Strata (UK) 2016 keynote can be found at: www.oreilly.com/ideas/the-internet-of-things-its-the-sensor-data-stupid; a selection of his Teradata Voice Forbes blogs can be found online here; and more recently, Martin co-authored a series of blogs on Data Science and Machine Learning – see, for example, Discovery, Truth and Utility: Defining ‘Data Science’.

Martin holds a BSc (Hons) in Physics & Astronomy from the University of Sheffield and a Postgraduate Certificate in Computing for Commerce and Industry from the Open University. He is married with three children and is a solo glider pilot, supporter of Sheffield Wednesday Football Club, very amateur photographer – and an even more amateur guitarist.

View all posts by Martin Willcox

Dr. Frank Säuberlich

Dr. Frank Säuberlich leads the Data Science & Data Innovation unit of Teradata Germany. Part of his responsibility is to make the latest market and technology developments available to Teradata customers. Currently, his main focus is on topics such as predictive analytics, machine learning and artificial intelligence.

Following his studies of business mathematics, Frank Säuberlich worked as a research assistant at the Institute for Decision Theory and Corporate Research at the University of Karlsruhe (TH), where he was already dealing with data mining questions.

His professional career included the positions of a senior technical consultant at SAS Germany and of a regional manager customer analytics at Urban Science International. Frank has been with Teradata since 2012. He began as an expert in advanced analytics and data science in the International Data Science team. Later on, he became Director Data Science (International).
