Do I have to bring my reusable bag to go to the Data Marketplace? Do they sell fish and apples made of bytes? Maybe you, like me, are also confused about what a Data Marketplace is, so let me try to explain the basics.

When we talk about a Data Marketplace, we are embracing the concepts of Data Sharing and Data Democratization or, in other words, looking for a simple and controlled way of putting data in users’ hands. Accessing data must be simple and happen in one place -- reducing data movement as much as possible while keeping tight governance over the data and its security, from both an access and a privacy perspective.

Let’s explore three different scenarios enabled by a Data Sharing strategy:

Self-Service.

This is probably the most common use and a key part of Data Democratization. Users interact with tools that give them a known path to the desired data, which is held in a centralized platform. Data movement is at its minimum, as the data has been curated and integrated before being published. The analysis is usually done in place by leveraging tool and platform capabilities. Metadata and lineage management are core to building this strategy; without them your Marketplace becomes a Garage Sale instead, where you have no guarantee of what will be there or of the quality of the product.

Let’s run through an example: use a visualization tool to query and navigate consolidated (dimensional, aggregated) customer data sitting in Teradata Vantage so users can build their own dashboards. At the same time, they can leverage, on the fly, web navigation records stored in object storage via the Native Object Storage (NOS) feature and apply pathing analytics on top of them using the Vantage nPath function. Or they can run ad-hoc queries on top of a normalized model on Vantage to prepare data for the next training set for a fraud model using Python.
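To make "pathing analytics" a bit more concrete, here is a minimal pure-Python sketch of the idea: order each customer's navigation records by time and keep the path that leads from one page to another. It is only an illustration of the concept, not the nPath function or its syntax, and the records, page names and the `paths_to_page` helper are all made up for the example.

```python
from collections import defaultdict

# Hypothetical web navigation records: (customer_id, timestamp, page).
clicks = [
    ("c1", 1, "home"),
    ("c1", 2, "search"),
    ("c1", 3, "checkout"),
    ("c2", 1, "home"),
    ("c2", 2, "help"),
    ("c3", 1, "search"),
    ("c3", 2, "home"),
    ("c3", 3, "product"),
    ("c3", 4, "checkout"),
]


def paths_to_page(records, start, goal):
    """For each customer, return the pages visited from the first
    `start` page up to the first later `goal` page, in time order."""
    by_customer = defaultdict(list)
    for customer_id, timestamp, page in records:
        by_customer[customer_id].append((timestamp, page))

    result = {}
    for customer_id, events in by_customer.items():
        # Sort by timestamp, then keep only the page names.
        pages = [page for _, page in sorted(events)]
        if start not in pages:
            continue
        begin = pages.index(start)
        if goal in pages[begin + 1:]:
            end = pages.index(goal, begin + 1)
            result[customer_id] = pages[begin:end + 1]
    return result


paths = paths_to_page(clicks, "home", "checkout")
for customer_id, path in sorted(paths.items()):
    print(customer_id, " > ".join(path))
```

In the Marketplace scenario this kind of logic would run inside the platform, next to the data, rather than on an extract pulled to a laptop.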

These capabilities should be driven by metadata collected after dozens or hundreds of executions on the data and the collaborative effort of curating reports, queries and content (aka crowdsourcing). The key theme is agility & data accessibility in a one-stop-shop, reducing replication.

External Data Consolidation.

Bringing outside data into your company’s repository looks simple, but there are a couple of aspects to consider, like data quality and integration. Sources that bring complementary information may help enhance the enterprise’s customer or context data, but curating them can lead to more than a headache. It is key for success that the ingestion pipeline is agile and easy (APIs and Object Storage can help with this approach) so that users can “subscribe” to sources and feed them into their Sandboxes for exploration. Again, this should be done on the same platform to minimize data movement.

The power of NOS and Vantage DataLab can help by making it simple to feed data into Object Storage and link it with production data, where advanced functions should be used to curate it while maintaining governance rules.

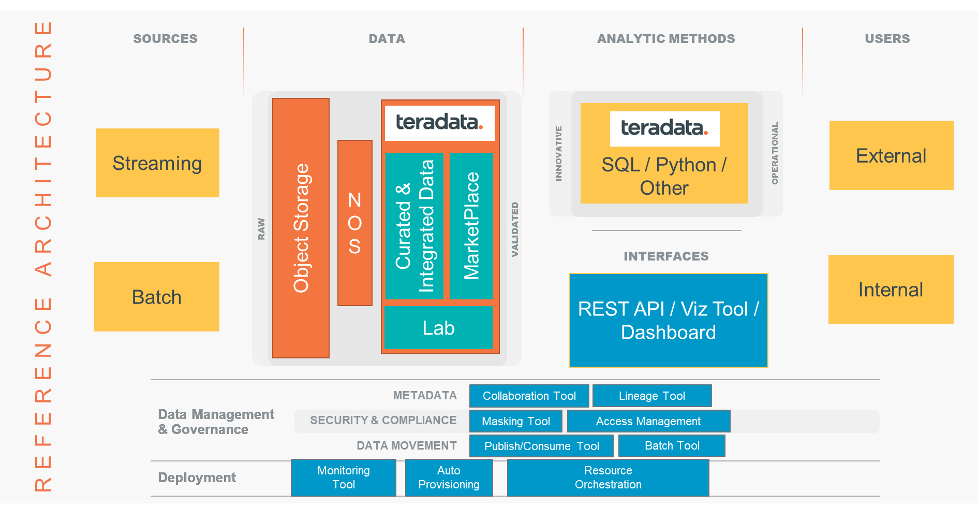

Figure 1. Marketplace Reference Architecture

Data Publication.

“We have a great amount of data on our business that others would pay for…” So why wouldn’t you be willing to share it externally? This is the latest and greatest theme for Data Sharing -- making data that has been curated and integrated by the enterprise externally available. It calls for special attention to data privacy and security: we don’t want to disclose private or confidential information to third parties (usually this kind of shared data should be aggregated and anonymized), and we also don’t want to create a security breach by giving anyone the right to access other production data. This strategy can also provide self-monitoring to customers or providers by building KPIs and presenting them on dynamic dashboards (examples include vendor shipment SLAs or customer billing trends).
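As a rough illustration of "aggregated and anonymized", the sketch below drops the customer identifier, publishes only per-region averages, and suppresses any group that is too small to hide an individual. The sample rows, the `publishable_summary` helper and the threshold of three are assumptions made for this example, not a recommended privacy standard.

```python
from collections import defaultdict

# Hypothetical threshold: groups smaller than this are not published.
MIN_GROUP_SIZE = 3

# Hypothetical internal billing rows, one per customer.
billing = [
    {"customer_id": "c1", "region": "North", "amount": 120.0},
    {"customer_id": "c2", "region": "North", "amount": 80.0},
    {"customer_id": "c3", "region": "North", "amount": 100.0},
    {"customer_id": "c4", "region": "South", "amount": 50.0},
    {"customer_id": "c5", "region": "South", "amount": 70.0},
]


def publishable_summary(rows, min_group_size=MIN_GROUP_SIZE):
    """Aggregate amounts by region, leaving out customer identifiers
    and suppressing regions with too few customers."""
    amounts_by_region = defaultdict(list)
    for row in rows:
        amounts_by_region[row["region"]].append(row["amount"])

    summary = []
    for region in sorted(amounts_by_region):
        amounts = amounts_by_region[region]
        if len(amounts) < min_group_size:
            continue  # too few customers to share safely
        summary.append({
            "region": region,
            "customers": len(amounts),
            "avg_amount": round(sum(amounts) / len(amounts), 2),
        })
    return summary


summary = publishable_summary(billing)
print(summary)
```

Only the summary would be exposed to third parties; the detailed rows stay behind the enterprise’s own access controls.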

By working with security roles and rights and the Query Services feature, you can easily implement a sharing strategy via REST APIs on Vantage, while leveraging the power of the MPP platform to scale processing as the demand for external data grows. Furthermore, you can run Vantage delivered as-a-service, in the cloud, to Scale Out as needed to manage spikes in demand for data consumption, orchestrated with Teradata’s advanced Workload Management capabilities. The impactful part of this solution is that we can finally monetize our efforts on integrating data (boring? Not anymore!).
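For a feel of what a REST-based consumer might look like, here is a small sketch that only builds (and does not send) an HTTP request carrying a SQL query for a read-only external user. The host, the `/systems/{system}/queries` path, the JSON fields, the user and the table name are assumptions for illustration; check the Query Service documentation for the actual endpoint and payload.

```python
import base64
import json
import urllib.request


def build_query_request(base_url, system, user, password, sql):
    """Build a POST request that submits `sql` as a JSON payload.

    The URL layout and payload fields are assumed for this sketch.
    """
    credentials = f"{user}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    body = json.dumps({"query": sql, "format": "OBJECT"}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/systems/{system}/queries",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# Hypothetical values: a partner account whose role can only read
# the shared, aggregated view.
request = build_query_request(
    base_url="https://qs.example.com:1443",
    system="demo_system",
    user="partner_ro",
    password="not-a-real-password",
    sql="SELECT region, avg_amount FROM shared.billing_summary",
)
print(request.get_method(), request.full_url)
```

The important design point is on the database side: the external user’s role should grant access to the published views only, so even a valid request cannot reach other production data.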

A good example of both sharing data externally AND integrating external data is Teradata’s COVID-19 Resiliency Dashboard where you can see how publicly available sources can be brought together to help companies plan and review their strategy to fight COVID-19 challenges.

Figure 2. Resiliency Dashboard

As mentioned in my previous post, be sure to apply a clear Data Strategy to your Data Sharing initiatives or you will be swimming in a sea of confusion: external users accessing private data, resources exhausted because workloads and SLAs were not identified and governed, and many other pains.

(Author):

Sebastian Barreda

Sebastian Barreda is an Ecosystem Architect with 15 years of experience working with Data and Analytics Solutions. He worked in Teradata Consulting in many roles, from ETL and BI development to Requirement gathering and Logical Data Modeling, where he gained practical experience in all of these topics. Later, he advanced his career to a Solution Architecture role, analyzing customers’ business and technical requirements to translate them into products, solutions, and services, understanding the key link between business needs and technology enablers, and leveraging cloud, open source and the so-called Big Data tools and solutions. He has delivered Data Strategy advisory in several industries, including Retail, Manufacturing, Communications, Media & Entertainment and Banking.
