Over the last seven years, there have been dozens of Hadoop and Spark benchmarks. The vast majority of them are scams. Sometimes the perpetrators are ignorant of how to do a benchmark correctly (see Hanlon’s Razor). But too often, some zealot just wants to declare their product the winner. They ignore or adjust the facts to fit their objective. The novice data management buyer then makes decisions based on misleading performance results.

This leads to slow-performing implementations, if not outright purchasing disasters. Business users are then told to abandon slow-running queries because they don’t need them. (Yeah, right.) That company then falls behind its competitors who made better purchasing decisions. Never forget: query performance is vital for employee productivity and accurate results. When hundreds of employees make thousands of informed decisions every day, it adds up.

Today’s misleading big data benchmarks mirror historical misbehavior. In the 1990s, poorly defined vendor benchmarks claimed superiority over competitors. Customers got bombarded with claims they could neither understand nor use for comparisons. The Transaction Processing Performance Council (TPC) was formed to define standard OLTP and decision support benchmark specifications. TPC benchmarks require auditors, full process disclosure, and pricing to the public. TPC-DS and TPC-H are the current decision support benchmark specifications. Ironically, TPC-DS and the older TPC-H queries are used in nearly all tainted benchmark boasts. IBM Research complained loudly about Hadoop TPC-DS benchmarks back in 2014. But the fake news, sometimes called bench-marketing, continues. These days, most of the misleading benchmarks come from academics and vendor laboratories, not marketing people.

Here are some of the ways Hadoop benchmark cheating occurs.

Grandpa’s hardware. Comparing old hardware and software to new is the oldest trick in the book. Comparing a 2012 server with four cores to 2017 servers with 22 cores is pure cheating. Furthermore, each CPU generation brings faster DRAM and PCI buses. Is it any surprise the new hardware wins all benchmark tests? But then some startup vendor publishes “NoSQL beats Relational Database X”, leaving out inconvenient details. There’s a variation of this cheat where hard disk servers are compared to SSD-only servers. Solution: publish full configuration details. And always use the same hardware and OS for every comparison.
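One way to make the "same hardware and OS" rule checkable is to publish each system's configuration as data and diff the two. The snippet below is a minimal sketch, not a standard tool: the function name `config_mismatches` and the configuration keys are hypothetical, chosen only to mirror the examples above.

```python
from typing import Any, Dict, Tuple


def config_mismatches(
    system_a: Dict[str, Any], system_b: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return every configuration key whose value differs between two systems.

    A key that is missing on one side is reported with None for that side.
    An empty result means the two published configurations match.
    """
    all_keys = sorted(set(system_a) | set(system_b))
    return {
        key: (system_a.get(key), system_b.get(key))
        for key in all_keys
        if system_a.get(key) != system_b.get(key)
    }


# Hypothetical configurations echoing the cheats described above.
old_box = {"server_year": 2012, "cores": 4, "storage": "HDD", "os": "Linux"}
new_box = {"server_year": 2017, "cores": 22, "storage": "SSD", "os": "Linux"}

for key, (a, b) in config_mismatches(old_box, new_box).items():
    print(f"{key}: {a} vs {b}")
```

If the diff is not empty, the comparison is between hardware generations, not between products.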

SQL we don’t like. None of the SQL-on-Hadoop offerings support all the ANSI SQL-99 functions. The easy solution is to discard SQL the product cannot run. While TPC-DS has roughly 100 SQL queries, no Hadoop vendor reports more than 25 query results. Worse, they modify the SQL in ways that invalidate the purpose of the test. Solution: Disclose all SQL modifications.

Cherry pickers. By reporting only the query tests that they win, the vendor claims they beat the competitor 100% of the time. These are easy to spot since every bar chart shows the competitor running 2X to 100X slower. Solution: publish all TPC test results. But this will never happen, which is why customers must run their own benchmarks.
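When you run your own benchmark, a small report that lists every query, including the losses and the ones that never ran, keeps cherry picking out of your own results too. This is a rough sketch under stated assumptions: `full_report` and its input layout (a mapping of query name to a pair of elapsed seconds) are hypothetical, and the geometric mean of per-query ratios is just one common way to summarize, not something the TPC specifications prescribe here.

```python
from math import prod
from typing import Dict, Iterable, Tuple


def full_report(
    expected_queries: Iterable[str],
    results: Dict[str, Tuple[float, float]],
) -> dict:
    """Summarize ALL results, not just the wins.

    results maps query name -> (our_seconds, competitor_seconds).
    Queries in expected_queries with no result are listed as missing
    so that silently dropped SQL is visible.
    """
    missing = sorted(set(expected_queries) - set(results))
    wins = sorted(q for q, (ours, theirs) in results.items() if ours < theirs)
    losses = sorted(q for q, (ours, theirs) in results.items() if ours > theirs)
    # Ratio > 1 means we were faster on that query; < 1 means we lost.
    ratios = [theirs / ours for ours, theirs in results.values()]
    geomean = prod(ratios) ** (1.0 / len(ratios)) if ratios else None
    return {
        "missing": missing,
        "wins": wins,
        "losses": losses,
        "geomean_speedup": geomean,
    }


# Hypothetical timings: one win, one loss, one tie, one query never run.
report = full_report(
    ["q1", "q2", "q3", "q4"],
    {"q1": (1.0, 4.0), "q2": (2.0, 1.0), "q3": (3.0, 3.0)},
)
print(report)
```

A report with an empty `losses` list and a long `missing` list is exactly the pattern to be suspicious of.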

Tweaks and no tweaks. First, install the competitor’s out-of-the-box product, preferably a back-level version. Run the tests. Next, load the cheater’s product on the system. Now tweak, tune, and cajole the cheater’s software to its highest performance. Too bad the competitor couldn't do any tweaks. Solution: test out-of-the-box products only.

Amnesia cache. Run the benchmark a few times so that all the data ends up in memory cache. Run it again and “Wow! That was fast.” Next, reboot and run the competitor software with empty memory caches forcing it to read all the data from disk. Surprise! The competitor loses. Solution: clear the in-memory cache before every test.
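The "clear the cache before every test" rule is easy to enforce if the test harness does it for you. Here is a minimal sketch of such a harness. Everything in it is an assumption for illustration: `run_cold` is a hypothetical name, each query is represented by a plain callable, and how you actually clear caches depends on your platform and product.

```python
import time
from typing import Callable, Dict, List


def run_cold(
    queries: Dict[str, Callable[[], None]],
    clear_cache: Callable[[], None],
    repeats: int = 3,
) -> Dict[str, List[float]]:
    """Time each query, calling clear_cache() before every single run.

    clear_cache is supplied by the tester. On Linux, one option is to run
    `sync` and then write 3 to /proc/sys/vm/drop_caches as root; the
    database's own buffer cache needs its own flush or a restart.
    """
    timings: Dict[str, List[float]] = {}
    for name, run_query in queries.items():
        timings[name] = []
        for _ in range(repeats):
            clear_cache()  # same cold start for every product, every run
            start = time.perf_counter()
            run_query()
            timings[name].append(time.perf_counter() - start)
    return timings


# Stand-in query and cache hook, only to show the calling convention.
cache_clears = []
results = run_cold(
    {"q1": lambda: sum(range(100_000))},
    clear_cache=lambda: cache_clears.append("cleared"),
    repeats=2,
)
print(len(cache_clears), "cache clears for", len(results["q1"]), "runs")
```

Passing the cache-clearing step in as a function keeps the harness identical for every product under test, which is the whole point.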

Manual optimizer (an oxymoron). Lacking an optimizer, Hadoop and Spark systems process tables in the order encountered in the SQL statement. So programmers reorder the SQL clauses for the best performance. That seems fair to me. But if a Tableau or Qlik user issues a query, those tweaks are not possible. Oops, not fair to the users. Solution: run the TPC test SQL ‘as-is’ without reordering.

Ignorance is easy. This is common with university students who lack experience testing complex systems. Benchmarking is a career path, not a semester’s experiment. Controlling all the variables is much harder than most people know. There are many common mistakes that students don’t grasp and repeatedly apply. Solution: be skeptical of university benchmarks. Sorry about that Berzerkly.

Scalability that is not. Many benchmarks double the data volume and call it a scalability test. Wrong. That’s a scale-up stress test. Scale-out is when more servers are added to an existing cluster. Solution: Double the data and the cluster hardware. If response time is within 5% of the prior tests, it’s a good scalability test. Note to self: Teradata systems consistently scale out at between 97% and 100%.
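The 5% rule is simple arithmetic, sketched below. The function names are hypothetical, and expressing scale-out efficiency as baseline time divided by scaled time is an assumption about how such a percentage might be computed, not a formula taken from any vendor.

```python
def scale_out_efficiency(baseline_seconds: float, scaled_seconds: float) -> float:
    """Efficiency after doubling BOTH the data and the cluster hardware.

    1.0 means response time did not change (perfectly linear scale-out);
    values below 1.0 mean the larger configuration ran slower.
    """
    if baseline_seconds <= 0 or scaled_seconds <= 0:
        raise ValueError("response times must be positive")
    return baseline_seconds / scaled_seconds


def is_good_scale_out(
    baseline_seconds: float, scaled_seconds: float, tolerance: float = 0.05
) -> bool:
    """True when the scaled response time is within tolerance of the baseline."""
    if baseline_seconds <= 0 or scaled_seconds <= 0:
        raise ValueError("response times must be positive")
    drift = abs(scaled_seconds - baseline_seconds) / baseline_seconds
    return drift <= tolerance


# Hypothetical timings: 100 s on the original cluster,
# 103 s after doubling both the data and the number of servers.
print(round(scale_out_efficiency(100.0, 103.0), 3))
print(is_good_scale_out(100.0, 103.0))
```

Note that both checks only mean something if the data and the hardware were doubled together. Doubling the data alone measures something else.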

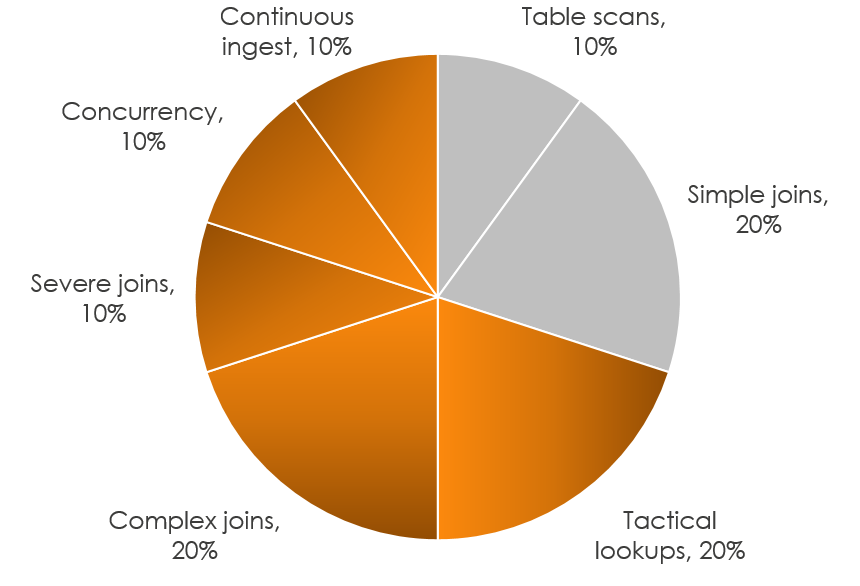

Teradata abandoned TPC testing in 2003. TPC workloads represent the easiest queries our customers run. We find business users evolve from simple queries to increasingly complex analytics. Which is how the phrase “query from hell” was born. Missing from the simplistic TPC tests are:

- Continuous ingest of data into tables being queried

- Testing 100-1000 concurrent users. This is exceptionally difficult to do correctly

- Severely complex joins with non-colocated fact tables (matching keys on different nodes)

- Complex joins: 20-50 table joins with 4-10 huge fact tables

TPC-DS testing falls into the gray pie slices shown. The gray slices are data mart workloads while the entire pie chart is a data warehouse workload. Table scans represent 7-10% of a data warehouse workload but they are 60-80% of Hadoop and Spark workloads. Simple joins combine many small dimension tables with a couple of fact tables. Tactical lookups in Hadoop must run in HBase to achieve sub-second performance. Which is like saying “Let's install two RDBMS systems: one for decision support, and one for tactical queries and keep them in sync.”

Most Teradata customers run 100 to 1000 concurrent users all day long. We call these Active Data Warehouses. ADWs deliver real-time access, continuous data loading, and complex analytics simultaneously. It’s not unusual for a Teradata customer to run ten million queries a day while loading data every five minutes in eight concurrent batch jobs. One customer has a 50-page SQL statement that runs in under a second. Others have 100-200 page SQL statements generated by modern BI tools. All this runs with machine learning grinding along in the ‘severe joins’ category.

One way to tell if a benchmark is honest is that the publisher loses some of the test cases. Teradata has published two such results:

- WinterCorp compares Hadoop to the Teradata 1700 system (2015)

- Radiant Advisors comparing SQL-on-Hadoop software (2016)

We encouraged these independent analysts to report all test results, even where our products did not win. Why? We want customers to use the right tool for the workload. These independent analysts pushed back occasionally.

We learned a lot from each other.

Custom benchmark tests are the only way to make an informed platform decision. They are time-consuming and expensive for both the vendor and the customer. But the information is vital. Product performance has a huge influence on business user productivity and accuracy. Yet benchmark performance is only a small part of a platform selection decision. Other product selection criteria of equal importance include:

- Total cost of ownership

- BI and ETL software interoperability

- Customer references you can talk to

- Governance capabilities

- Security features

- High availability vetting

- Public and hybrid cloud support

- System administration tools

- Ease of use and flexibility for business users

- Analyst reports and inquiries

Platform selection is a complex task. If you need help, Teradata has an unbiased professional services engagement to crawl through all the issues. The results of those comparisons show some workloads go on Hadoop, some on the data warehouse, some on other platforms. We practice best fit engineering and you should too.

I hope you now will realize the real lies with your real eyes.

Or trust in Dilbert’s answer “A misleading benchmark test can accomplish in ten minutes what years of good engineering can never do.”

With over 30 years in IT, Dan Graham is currently the Technical Marketing Director for the Internet of Things at Teradata. He has been a DBA, Product Manager for the IBM RS/6000 SP2, Strategy Director in IBM’s Global BI Solutions division, and General Manager of Teradata’s high end 6700 servers. At IBM he managed four benchmark centers including large scale mainframes and parallel PSeries systems.
