Ok, I’m going to say something unpopular now. This is a message for the industrial companies, the ones who build, operate, maintain, and rely on large, complex assets, processes, capital equipment, systems, and machinery. Some of these assets have been used by companies for 20+ years.

If you have been reading the popular press, you have been fed a line and a vision of a bright, beautiful, and imminent future. You’ve been told about the coming of Industry 4.0. You've been sold a vision of automation: robotics, IoT, 3D printing, 5G, and artificial intelligence (AI). The message is that wherever AI is present, autonomous and magical decisions take place.

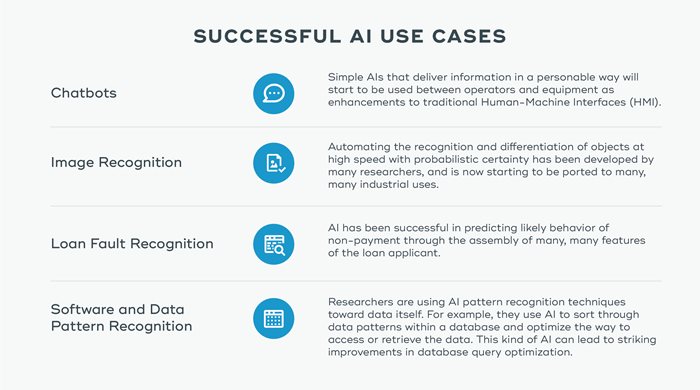

Yes, it’s true that AI is making great inroads in certain areas like chatbots, image recognition, and loan default prediction.

Yet when it comes to AI for industrial use, you have mainly been fed a pack of lies. Let me debunk a few common myths about AI.

5 Myths You Have Been Told About Industrial Artificial Intelligence

Myth 1: Plug in your data; out pops intelligence.

Recently, so-called “cognitive AI” was applied to a series of predictive maintenance problems in the hope that, simply by ingesting large heaps of machine data, the AI could predict which component of the equipment would fail next. The AI would then predict the best order in which to maintain the equipment. The result was an utter failure to make intelligent predictions. The AI could predict events that were obviously bound to happen, but it was unable to provide context for the prediction or an explanation as to why. That’s because, to work, predictive science must rely on a cognitive or ontological model of the world it’s trying to predict. In a business context, the AI would use data such as the equipment type, its location, its components, and how the equipment has been used earlier in its life cycle to determine the relevant information. However, without great effort, these data points are typically not available to the data scientist trying to build the AI, unless the company has established its data foundation, or digital thread.
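To make the point about context concrete, here is a minimal Python sketch of what "attaching the digital thread" to raw machine data might look like. Everything in it is an assumption for illustration: the `AssetContext` fields, the `attach_context` helper, and the sample pump and fan records are hypothetical, not taken from any real system.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class AssetContext:
    """Hypothetical slice of a digital thread for one asset."""
    asset_id: str
    equipment_type: str
    location: str
    components: List[str]
    operating_hours: float


def attach_context(
    readings: List[Dict], contexts: List[AssetContext]
) -> Tuple[List[Dict], List[Dict]]:
    """Join raw sensor readings to asset context.

    Returns (enriched, orphaned): readings with context attached,
    and readings whose asset has no context record at all.
    """
    by_id = {c.asset_id: c for c in contexts}
    enriched, orphaned = [], []
    for reading in readings:
        ctx = by_id.get(reading["asset_id"])
        if ctx is None:
            # No equipment type, location, or usage history: the number
            # exists, but there is nothing to interpret it against.
            orphaned.append(reading)
            continue
        enriched.append({
            **reading,
            "equipment_type": ctx.equipment_type,
            "location": ctx.location,
            "components": ctx.components,
            "operating_hours": ctx.operating_hours,
        })
    return enriched, orphaned


# Illustrative sample data (made up for this sketch).
contexts = [
    AssetContext("pump-7", "centrifugal pump", "Plant A",
                 ["impeller", "seal"], 41200.0),
]
readings = [
    {"asset_id": "pump-7", "vibration_mm_s": 7.1},
    {"asset_id": "fan-3", "vibration_mm_s": 2.4},
]

enriched, orphaned = attach_context(readings, contexts)
print(len(enriched), "reading(s) with context;", len(orphaned), "without")
```

The orphaned list is the part worth watching: in practice it tends to be large, and every reading in it is one a model cannot place in any model of the world.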

Myth 2: AI allows you to skip a bunch of steps.

Nope. AI actually needs more steps. You still have to do all the data processes to create an up-to-date data foundation, which I described as creating your digital thread. But let’s say you have already conquered those challenges. AI may enable you to skip some of the feature engineering done by humans, but it will do many more iterations of that engineering, requiring huge amounts of computational power. Layers and layers of computation are required, which is why, incidentally, it’s called “deep learning.” This brings us to the real problem here: the AI is highly dependent on the very manual process of labeling the data so that it can learn to predict, perceive, or distinguish accurately. Acquiring a labeled dataset still requires vast quantities of manually labeled input data to feed the AI. In fact, whole new businesses have emerged, describing themselves as a “TaskRabbit for data,” including Gengo and CloudFactory. These new entrants to the “gig economy” are serving the need for manual labor to build these datasets. For industrial applications, “labeling” is a very simple word for the hard work involved in that process. Labeling often involves things like tearing down an electronic device to determine where the failure occurred, examining a machine in a failure analysis lab, and x-raying a high-pressure hose to determine whether there were defects that led to its failure. These so-called “labels” are very, very expensive in the industrial world.

Myth 3: AI will replace your workers because it mimics human intelligence.

AI will only replace workers who are performing very simple and narrow tasks, jobs that will be automated in the near future anyway. AI in today’s world is good for tasks that a child could do. These are often tasks that can be completed in a 15-second timeframe, like differentiating one object from another, detecting sentiment, or recognizing a pattern within a shape. What can AI do better than humans? It can sort through a seemingly vast number of details and bring organization to the data.

Myth 4: AI can explain why something happened.

Until recently, AI was very much a black box, which means it couldn’t explain how it came to its conclusion or what led to a certain prediction. Now, some types of AI models and techniques provide a pathway to their reasoning, but this doesn’t replace the scientific method for determining causal links. In industrial settings, it’s critical for us to know why something is breaking so that we can fix the cause. If we don’t find the contributing factors and make the appropriate adjustments, the issue is going to happen again.

Myth 5: AI can predict the future.

No, AI cannot predict the future, and it certainly cannot make the types of predictions industrial use requires. AI can’t make predictions; it can only verify a fact. In industrial use cases, we need more meaningful predictions, like “Which characteristics of a fan blade indicate that it may jam in the future?” For the AI to make an educated guess, you’ll need a lot of different data points. This data would include fan blade sensor readings, as well as associated motor failures. You also need to assemble your digital thread, or “model of the world,” to make sense of all that sensor data. The problem is, once you’ve compiled all of the necessary factors, you have probably assembled enough real human intelligence to make the determination without the help of AI. If AI is ever to make these kinds of predictions in lieu of humans, that will happen in the future, certainly not today.

The Truth About AI

Don’t get me wrong, AI still bears huge promise, with the prospect of automating so many tedious or physically difficult tasks. But as we’ve seen with autonomous driving technology, one of the most popular industrial AI use cases, many barriers loom large. It’s easy for us to buy into the fantasy of a simpler, more automated world, but it’s most important to remember the work and process required to produce the right outcomes.

Cheryl Wiebe is an Ecosystem Architect in Teradata’s Data and Analytics Strategy team in the Americas region, and works from her virtual office in Southern California. Her focus is on the business, data, and applications areas of analytic ecosystems. She has spent years working with customers to help create a digital strategy in which they can bring together sensor data and other machine interaction data and connect it with other enterprise and operational domain data. The goal is to improve the reliability and efficiency of large equipment, large machinery, and other large (and expensive) assets, as well as the supply chain and extended value chain processes around those assets.

Industry-spanning programs, such as Industry 4.0 and others that address enterprises in their goals to “go digital” in a journey to the cloud, are where Cheryl focuses. She helps companies leverage traditional and new IoT settings to organize and develop their business, data and analytic architectures. This prepares them to build analytics that can inform the digital enterprise, whether it’s in Connected Vehicle services, Smart Mobility, Connected Factories, and Connected Supply Chain, or specialized solutions such as Industrial Inspection / Vision AI solutions that address needs to replace tedious work with AI.

Cheryl’s background in Supply Chain, Manufacturing and Industrial Methods stems from her 12+ years in management consulting, industrial/high tech, analytics companies, and Teradata, where she’s spent the last 18 years.
